The rapid proliferation of agentic AI within the enterprise has brought credible promise, but also significant integration challenges. As AI systems become more sophisticated and more deeply embedded in business operations and line-of-business applications, the need for seamless, standardized connectivity to diverse data sources, processes, and operational tools has never been more critical. The Model Context Protocol (MCP) is emerging as the pivotal standard addressing this need, promising to redefine how AI interacts with the enterprise ecosystem.

Major players like Anthropic, OpenAI, Microsoft, and Google are already championing MCP, recognizing its potential to simplify AI integration, drastically reduce development overhead, and catalyze a vibrant marketplace for AI-driven solutions. This article delves into the core tenets of MCP, its architecture, and its implications for the future of enterprise AI, particularly within agentic Retrieval-Augmented Generation (RAG) systems.

Note: This article does not address the security considerations of MCP. They will be covered in part two of this series.

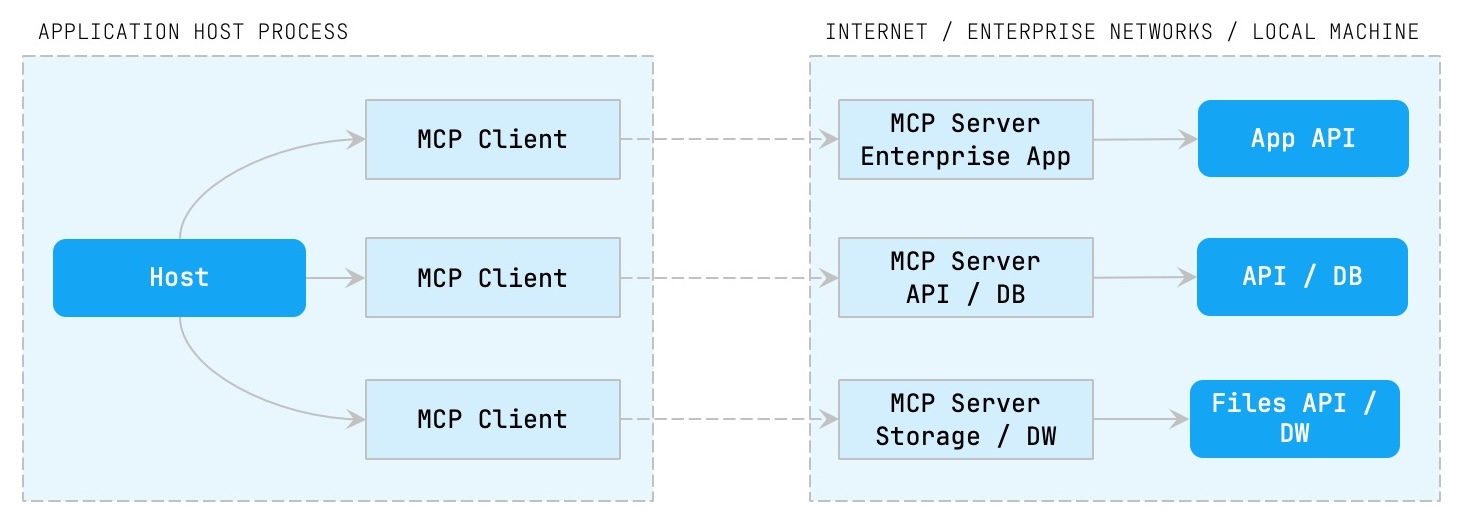

At its heart, MCP is a standardized protocol designed to enable AI systems to connect and interact consistently with a multitude of external tools and data sources. It liberates AI agents from the burden of requiring bespoke integrations for every application they need to access. This standardization is achieved through a client-server architecture, in which AI tools (the clients) communicate with external systems (exposed by servers) using JSON-RPC 2.0 messages.
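To make the JSON-RPC 2.0 exchange concrete, here is a minimal sketch of the message shapes a client and server might trade. The `jsonrpc`, `id`, `method`, `params`, and `result` fields come from the JSON-RPC 2.0 specification. The `tools/call` method name follows the MCP specification as I understand it. The tool name `search_tickets`, its arguments, and the helper functions are hypothetical and exist only for illustration.

```python
import json
from itertools import count

# Each request carries an id so the client can match the response to it.
_ids = count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request object as a plain dict."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_result(request, result):
    """Build the success response that answers `request`."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Hypothetical tool name and arguments, for illustration only.
call = make_request(
    "tools/call",
    {"name": "search_tickets", "arguments": {"query": "open invoices"}},
)
wire = json.dumps(call)  # what would actually travel over the transport
reply = make_result(call, {"content": [{"type": "text", "text": "3 tickets found"}]})
```

The point of the sketch is that the client needs to know only the message format, not anything about how the server implements the tool.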

The inherent genius of MCP lies in its ability to decouple capabilities from the AI model itself. This means that an AI model doesn’t need to be retrained or re-engineered every time it needs to perform a new function or access a new data source. Instead, these capabilities are exposed through specialized MCP servers. This decoupling fosters a dynamic marketplace where developers can create and share specialized MCP servers for various services, accelerating innovation and deployment.

This modularity empowers organizations to extend the functionalities of their AI applications with new capabilities without the need for extensive model retraining or the development of custom API integrations for each new tool.
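The decoupling described above can be sketched with a toy, in-process stand-in for a server. This is not the official MCP SDK: `ToyToolServer`, `register`, and `handle` are hypothetical names, and the `tools/list` result is simplified to bare tool names, whereas a real server returns richer tool descriptions. The error codes are the standard JSON-RPC 2.0 ones.

```python
from typing import Any, Callable, Dict


class ToyToolServer:
    """Illustrative stand-in for an MCP-style server; not the official SDK."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        # Adding a capability touches only the server, never the model.
        self._tools[name] = fn

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        base = {"jsonrpc": "2.0", "id": request.get("id")}
        method = request.get("method")
        if method == "tools/list":
            # Simplified: names only.
            return {**base, "result": {"tools": sorted(self._tools)}}
        if method == "tools/call":
            params = request.get("params", {})
            fn = self._tools.get(params.get("name"))
            if fn is None:
                # -32602 is JSON-RPC 2.0 "Invalid params".
                return {**base, "error": {"code": -32602, "message": "unknown tool"}}
            return {**base, "result": fn(**params.get("arguments", {}))}
        # -32601 is JSON-RPC 2.0 "Method not found".
        return {**base, "error": {"code": -32601, "message": "method not found"}}


server = ToyToolServer()
server.register("add", lambda a, b: a + b)
# A new capability arrives later; the client code and the model are unchanged.
server.register("word_count", lambda text: len(text.split()))
```

A client that discovers tools through `tools/list` picks up `word_count` the next time it asks, which is the sense in which capabilities live outside the model.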

Most sophisticated RAG systems currently in production incorporate some degree of “agency.” This agency often manifests in the intelligent selection of data sources for retrieval, particularly when dealing with a multitude of disparate data repositories. MCP significantly enriches the evolution of these agentic RAG systems, especially in scenarios involving diverse data types.
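As a rough illustration of source selection, the sketch below routes a query to the most relevant of several repositories. In a real agentic RAG system the model itself would usually make this choice from the tool descriptions each server advertises. Here a simple keyword overlap stands in for that decision. The catalogue `SOURCES`, its entries, and `select_sources` are all hypothetical.

```python
from typing import Dict, List

# Hypothetical catalogue: server name -> terms describing what it holds.
SOURCES: Dict[str, List[str]] = {
    "crm": ["customer", "account", "contact"],
    "wiki": ["policy", "procedure", "handbook"],
    "tickets": ["incident", "outage", "bug"],
}


def select_sources(query: str, top_k: int = 1) -> List[str]:
    """Return up to top_k source names whose terms overlap the query."""
    words = set(query.lower().split())
    scored = [(len(words & set(terms)), name) for name, terms in SOURCES.items()]
    # Drop sources with no overlap; rank by score, then name for stable ties.
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [name for _, name in ranked[:top_k]]
```

For example, `select_sources("which customer reported the outage", top_k=2)` selects both the CRM and the ticketing source, so retrieval can query each through its own server.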

The future trajectory of MCP installations is poised to mirror the ubiquitous mobile app store model. Just as Apple and Google dictate the visibility and approval of mobile applications, LLM clients will increasingly control which MCP servers are surfaced, promoted, or even permitted within their ecosystems. This will create a competitive landscape where companies vie for premium visibility and distribution for their specialized MCP servers, transforming MCP directories into high-stakes distribution platforms.

The Model Context Protocol represents a paradigm shift in how enterprises will integrate and scale their AI initiatives. By offering a standardized approach to connecting AI with diverse tools and data sources, MCP dramatically simplifies development, fosters innovation, and unlocks unprecedented possibilities for AI-powered applications across all domains. For IT decision makers, understanding and strategically embracing MCP is not merely an advantage; it is a critical imperative for building resilient, agile, and powerfully intelligent enterprise systems.
